How to build modern data applications with the SNOWFLAKE DATA CLOUD?

It is important to understand that Snowflake is a single, global, unified system where you can ingest, access, analyze, and take action on your data. You can build your applications directly on the Snowflake Data Cloud.

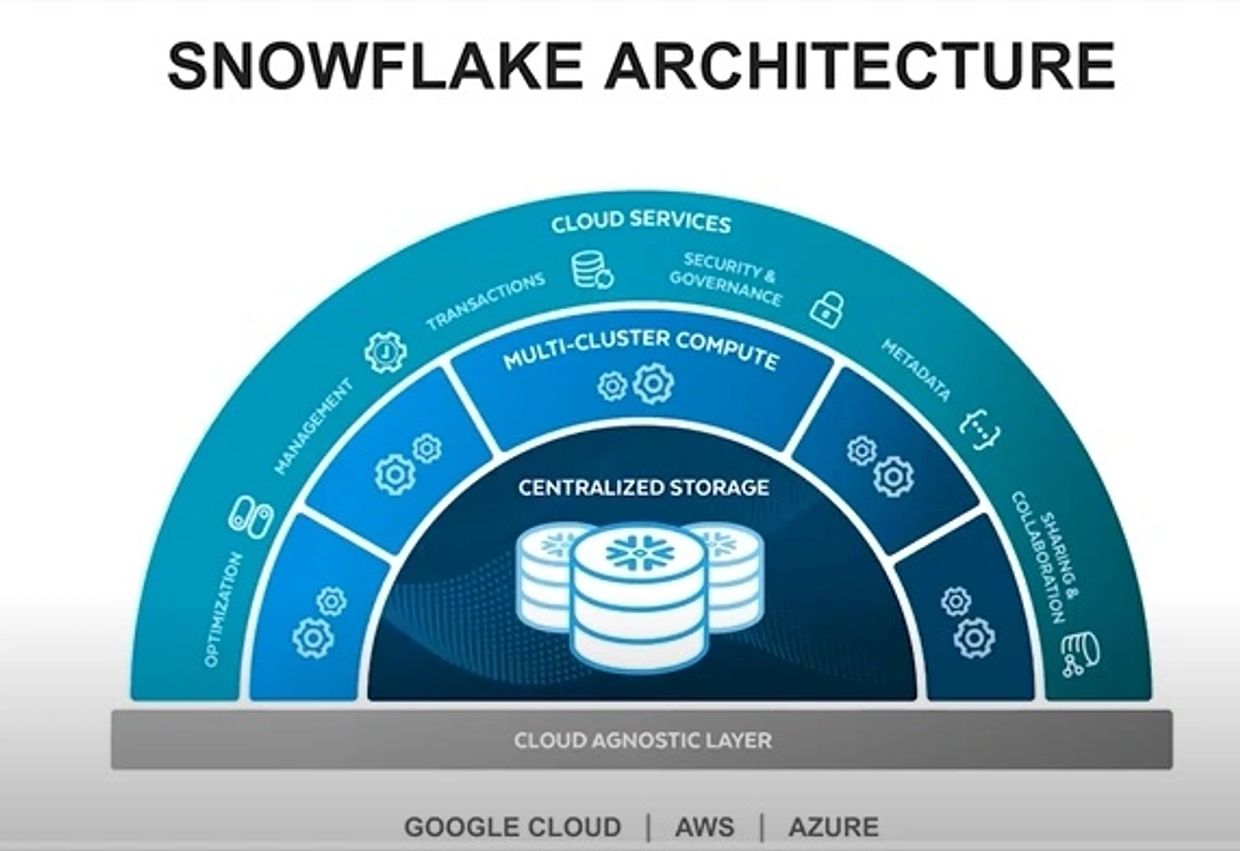

What powers Snowflake is its unique architecture. At its base is the cloud-agnostic layer. Snowflake not only runs on the major cloud providers but also uses each provider's native technologies and services when running on them. For example, if you use Snowflake's external functions capability, you are doing that with an AWS Lambda function or a GCP Cloud Function. Snowflake then has its multi-cluster compute and centralized storage.

Centralized storage is where you store any and all data that you want to access and analyze, whether it is structured, semi-structured, or unstructured. It all lives there, and storage scales independently of the compute layer. What is really powerful about Snowflake's multi-cluster compute layer is that you can have separate compute for different workloads. For example, say one team has a very predictable workload but needs tight reliability and consistent query times. You can spin up compute dedicated to that team, separate from another team's. This gives you not only cost optimizations between the different workloads but also independent scalability across teams.
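As a rough illustration of dedicating compute to individual teams, the sketch below renders one `CREATE WAREHOUSE` statement per team. The team names, sizes, and suspend timings are made up for the example, and the `WarehouseSpec` helper is hypothetical. The statements are only built as strings here, not sent to Snowflake, and a cluster count above 1 assumes an edition that supports multi-cluster warehouses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarehouseSpec:
    """Hypothetical description of one team's dedicated compute."""
    name: str
    size: str            # e.g. "XSMALL", "MEDIUM", "LARGE"
    auto_suspend_s: int  # idle seconds before the warehouse suspends
    min_clusters: int = 1
    max_clusters: int = 1


def create_warehouse_sql(spec: WarehouseSpec) -> str:
    """Render a CREATE WAREHOUSE statement for the given spec."""
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {spec.name} WITH "
        f"WAREHOUSE_SIZE = '{spec.size}' "
        f"AUTO_SUSPEND = {spec.auto_suspend_s} "
        f"AUTO_RESUME = TRUE "
        f"MIN_CLUSTER_COUNT = {spec.min_clusters} "
        f"MAX_CLUSTER_COUNT = {spec.max_clusters}"
    )


# Team names and sizes are invented for illustration.
specs = [
    WarehouseSpec("REPORTING_WH", "MEDIUM", auto_suspend_s=300),
    WarehouseSpec("DATA_SCIENCE_WH", "LARGE", auto_suspend_s=60, max_clusters=3),
]
statements = [create_warehouse_sql(s) for s in specs]

if __name__ == "__main__":
    for stmt in statements:
        print(stmt + ";")
```

Because each team gets its own warehouse, the size and suspend settings of one can be changed without touching the other, which is the independent scalability described above.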

THE CLOUD SERVICES

The cloud services layer is responsible for security, governance, all of the metadata, and query optimization. It also covers the sharing and collaboration that Snowflake is capable of at its foundational core, through the data marketplace and private shares among organizations.

BUILDING DATA APPS IS HARD

Today, organizations face many problems with their data, including the following:

A- Manual, costly Scaling

1- Growing customer / user base: Constantly hitting data volume and concurrency limits

2- Variable demand patterns: Spikes require 24x7 overprovisioning, hurting margins

3- Difficult to meet SLAs (service-level agreements): Must scale to meet needs of largest customers, or isolate in separate DB

B- Complex Data Pipelines

1- Requires building / maintaining complex data pipelines: Increasing personnel, architecture costs

2- Extended time to insight: Hours, days to analyze fresh data, poor customer experience

3- Teams need to have specialized skills, know proprietary query languages: Expensive headcount

C- Operational Burden

1- Growing customer / user base: Constantly hitting data volume and concurrency limits

2- Variable demand patterns: Spikes require 24x7 overprovisioning, hurting margins

3- Difficult to meet SLAs: Must scale to meet needs of largest customers, or isolate in separate DB

How does this work in the context of building data apps?

Data apps are incredibly data intensive, so scaling them can be very manual and costly. As your application grows, its consumption of resources grows with it. To meet that demand, developers and organizations often have to either over-provision so they can keep up, or manually segment the scaling at a very precise level. Both are difficult without the right tools and platform in place. Data pipelines face the same pressure: as your organization and application grow, so do the amount of data and the number of places it is stored, and the pipelines become more complex because they must fetch and transform more data and be managed over time. Furthermore, once the system is up and running you cannot simply step away from it; you still carry the operational burden of maintaining the entire system regularly. You also still have to meet your SLAs, so as your customers' demand and usage grow you need processes and tools in place to meet those SLAs accurately and reliably.

How does this relate to a traditional architecture and some of its challenges?

A traditional architecture is going to have several different layers in your application:

1- The apps and services layer: This is the actual app that runs on your mobile phone or your desktop computer, or maybe it is the web (e-commerce) application itself.

2- The web or app tier: There is a REST API that takes in calls and essentially executes SQL statements to create, update, or delete data inside a database. Each of these tiers has different scaling requirements and different amounts of demand. As more and more users hit your web and app tier, that load quickly and significantly hits your database tier. You will have a certain level of concurrency, with all of your users trying to access the same data inside your database at the same time. As this scaling happens, degraded query performance is very common, and it is very costly to scale these different layers.
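To make the web tier's role concrete, here is a minimal sketch of a handler that turns REST-style calls into SQL statements. It uses Python's built-in `sqlite3` purely as a stand-in database so the example runs anywhere. The `products` table, the `handle_request` function, and the method-to-statement mapping are assumptions made for illustration, not part of any particular framework.

```python
import sqlite3


def handle_request(conn, method, item):
    """Translate one REST-style call into a SQL statement and run it."""
    if method == "POST":      # create
        conn.execute(
            "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
            (item["id"], item["name"], item["price"]),
        )
    elif method == "PUT":     # update
        conn.execute(
            "UPDATE products SET name = ?, price = ? WHERE id = ?",
            (item["name"], item["price"], item["id"]),
        )
    elif method == "DELETE":  # delete
        conn.execute("DELETE FROM products WHERE id = ?", (item["id"],))
    else:
        raise ValueError(f"unsupported method: {method}")
    conn.commit()


# In-memory database standing in for the database tier.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)"
)

handle_request(conn, "POST", {"id": 1, "name": "mug", "price": 9.5})
handle_request(conn, "POST", {"id": 2, "name": "lamp", "price": 30.0})
handle_request(conn, "PUT", {"id": 1, "name": "mug", "price": 8.0})
handle_request(conn, "DELETE", {"id": 2})

rows = conn.execute("SELECT id, name, price FROM products ORDER BY id").fetchall()
```

Every incoming call ends up as at least one statement against the database, which is why growth in web-tier traffic lands so directly on the database tier.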

WHAT IS YOUR DATA SCALING PREFERENCE?

This is a very common question and decision that organizations have to make today.

1- Do they spend a lot of money up front to over-provision their database tier so that they can meet the demand? Or

2- Do they try to scale it with the actual demand that is coming in?

WHAT ARE THE BIGGEST CHALLENGES FOR COMPANIES TODAY?

One of the biggest challenges with traditional architecture is that companies are basically building their infrastructure for their peak load. The difficulty of scaling out these database tiers is immense. These technologies were built decades ago, and it is very difficult to add compute to them on demand. What happens is that once a quarter or once a year, companies make estimates for a year from now and work out the next set of servers they need to bring online. The real concern is that for the next 11 or 12 months they are significantly over-provisioned and running far more hardware than necessary, just because it is so difficult to bring more servers online. These systems do not scale in minutes; scaling them often takes hours or days, which is very different from cloud-based solutions.
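The cost of building for peak load can be shown with a little arithmetic. The sketch below compares server-hours for capacity fixed at the peak against capacity that follows demand hour by hour. The demand figures are entirely hypothetical and the "server" unit is an abstraction chosen for the example; real workloads and pricing will differ.

```python
# Hypothetical hourly demand over one day, in "servers' worth" of load.
hourly_demand = [2, 2, 1, 1, 1, 2, 4, 6, 8, 9, 10, 10,
                 9, 9, 8, 8, 7, 6, 5, 4, 3, 3, 2, 2]


def peak_provisioned_hours(demand):
    """Server-hours when capacity is fixed at the peak for every hour."""
    return max(demand) * len(demand)


def elastic_hours(demand):
    """Server-hours when capacity follows demand hour by hour."""
    return sum(demand)


fixed = peak_provisioned_hours(hourly_demand)    # 10 servers * 24 hours
elastic = elastic_hours(hourly_demand)
idle_fraction = (fixed - elastic) / fixed

if __name__ == "__main__":
    print(f"fixed={fixed} elastic={elastic} idle={idle_fraction:.0%}")
```

With these made-up numbers, roughly half of the peak-provisioned capacity sits idle over the day, which is the over-provisioning described above.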

SNOWFLAKE ARCHITECTURE

Snowflake’s Data Cloud is powered by an advanced data platform provided as Software-as-a-Service (SaaS). Snowflake enables data storage, processing, and analytic solutions that are faster, easier to use, and far more flexible than traditional offerings.

The Snowflake data platform is not built on any existing database technology or “big data” software platforms such as Hadoop. Instead, Snowflake combines a completely new SQL query engine with an innovative architecture natively designed for the cloud. To the user, Snowflake provides all of the functionality of an enterprise analytic database, along with many additional special features and unique capabilities.

Data Platform as a Cloud Service

Snowflake is a true SaaS offering.

More specifically:

There is no hardware (virtual or physical) to select, install, configure, or manage.

There is virtually no software to install, configure, or manage.

Ongoing maintenance, management, upgrades, and tuning are handled by Snowflake.

Snowflake runs completely on cloud infrastructure. All components of Snowflake’s service (other than optional command line clients, drivers, and connectors), run in public cloud infrastructures.

Snowflake uses virtual compute instances for its compute needs and a storage service for persistent storage of data. Snowflake cannot be run on private cloud infrastructures (on-premises or hosted).

Snowflake is not a packaged software offering that can be installed by a user. Snowflake manages all aspects of software installation and updates.

Snowflake Architecture

Snowflake’s architecture is a hybrid of traditional shared-disk and shared-nothing database architectures. Similar to shared-disk architectures, Snowflake uses a central data repository for persisted data that is accessible from all compute nodes in the platform. But similar to shared-nothing architectures, Snowflake processes queries using MPP (massively parallel processing) compute clusters where each node in the cluster stores a portion of the entire data set locally. This approach offers the data management simplicity of a shared-disk architecture, but with the performance and scale-out benefits of a shared-nothing architecture.
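The hybrid idea can be sketched with a toy model: one central store that every node can read, and a query that is split so each node works on only its portion of the data. This is a conceptual illustration only. The partition names, the round-robin assignment, and the function names are assumptions for the example and say nothing about how Snowflake actually distributes work.

```python
# Central repository: any node can read any partition (shared-disk idea).
central_storage = {
    "p0": [3, 5, 8],
    "p1": [1, 4],
    "p2": [7, 2, 6],
    "p3": [9],
}


def assign_partitions(partition_ids, node_count):
    """Round-robin partitions across nodes (shared-nothing idea)."""
    assignment = {n: [] for n in range(node_count)}
    for i, pid in enumerate(sorted(partition_ids)):
        assignment[i % node_count].append(pid)
    return assignment


def node_partial_sum(storage, partition_ids):
    """Each node scans only its own portion of the data."""
    return sum(sum(storage[pid]) for pid in partition_ids)


def cluster_sum(storage, node_count):
    """Combine the per-node partial results into one answer."""
    assignment = assign_partitions(storage.keys(), node_count)
    partials = [node_partial_sum(storage, pids) for pids in assignment.values()]
    return sum(partials), assignment


total, assignment = cluster_sum(central_storage, node_count=2)
```

Changing `node_count` changes how the work is divided but not the result, since the data itself stays in the one central store.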

Snowflake’s unique architecture consists of three key layers:

Database Storage

Query Processing

Cloud Services

Database Storage

When data is loaded into Snowflake, Snowflake reorganizes that data into its internal optimized, compressed, columnar format. Snowflake stores this optimized data in cloud storage.
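As a general illustration of what a compressed, columnar layout means, the sketch below pivots a few rows into per-column lists and run-length encodes one column. Snowflake's internal format is not exposed, so this is not its actual encoding. The sample rows and the choice of run-length encoding are assumptions made to show why storing a column's values together tends to compress well.

```python
from itertools import groupby

# Made-up rows, as they might arrive from a row-oriented source.
rows = [
    {"region": "EU", "status": "paid", "amount": 10},
    {"region": "EU", "status": "paid", "amount": 25},
    {"region": "US", "status": "paid", "amount": 7},
    {"region": "US", "status": "open", "amount": 7},
]


def to_columns(records):
    """Pivot a list of row dicts into one list per column."""
    return {key: [r[key] for r in records] for key in records[0]}


def run_length_encode(values):
    """Collapse runs of equal neighbours into [value, count] pairs."""
    return [[v, len(list(run))] for v, run in groupby(values)]


columns = to_columns(rows)
encoded_region = run_length_encode(columns["region"])
```

A query that only needs `amount` can read that one list and skip the others, which is the usual motivation for columnar storage in analytic systems.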

Snowflake manages all aspects of how this data is stored — the organization, file size, structure, compression, metadata, statistics, and other aspects of data storage are handled by Snowflake. The data objects stored by Snowflake are not directly visible nor accessible by customers; they are only accessible through SQL query operations run using Snowflake.

Query Processing

Query execution is performed in the processing layer. Snowflake processes queries using “virtual warehouses”. Each virtual warehouse is an MPP compute cluster composed of multiple compute nodes allocated by Snowflake from a cloud provider.

Each virtual warehouse is an independent compute cluster that does not share compute resources with other virtual warehouses. As a result, each virtual warehouse has no impact on the performance of other virtual warehouses.


Cloud Services

The cloud services layer is a collection of services that coordinate activities across Snowflake. These services tie together all of the different components of Snowflake in order to process user requests, from login to query dispatch. The cloud services layer also runs on compute instances provisioned by Snowflake from the cloud provider.

Services managed in this layer include:

Authentication

Infrastructure management

Metadata management

Query parsing and optimization

Access control

Connecting to Snowflake

Snowflake supports multiple ways of connecting to the service:

A web-based user interface from which all aspects of managing and using Snowflake can be accessed.

Command line clients (e.g. SnowSQL) which can also access all aspects of managing and using Snowflake.

ODBC and JDBC drivers that can be used by other applications (e.g. Tableau) to connect to Snowflake.

Native connectors (e.g. Python, Spark) that can be used to develop applications for connecting to Snowflake.

Third-party connectors that can be used to connect applications such as ETL tools (e.g. Informatica) and BI tools (e.g. ThoughtSpot) to Snowflake.
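Of the options above, the Python connector is probably the quickest to try from application code. The sketch below assumes the `snowflake-connector-python` package is installed and that credentials are supplied through environment variables. The `SNOWFLAKE_*` variable names, the `COMPUTE_WH` default, and both helper functions are assumptions for this example rather than anything the connector requires.

```python
import os


def connection_params(env=os.environ):
    """Collect connection settings from environment variables.

    The SNOWFLAKE_* names are a convention assumed for this sketch.
    """
    return {
        "account": env["SNOWFLAKE_ACCOUNT"],
        "user": env["SNOWFLAKE_USER"],
        "password": env["SNOWFLAKE_PASSWORD"],
        # Placeholder default; use whichever warehouse your account has.
        "warehouse": env.get("SNOWFLAKE_WAREHOUSE", "COMPUTE_WH"),
    }


def current_version(params):
    """Open a session, run one query, and return the server version."""
    # Imported lazily so this module loads without the connector installed.
    import snowflake.connector

    conn = snowflake.connector.connect(**params)
    try:
        cur = conn.cursor()
        cur.execute("SELECT CURRENT_VERSION()")
        return cur.fetchone()[0]
    finally:
        conn.close()


if __name__ == "__main__":
    print(current_version(connection_params()))
```

Keeping the credentials out of the source and closing the connection in a `finally` block are ordinary precautions; the same query could equally be run through SnowSQL or the web interface.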